B21, China Town Mall, Midrand

- Shipping Timeframes: All orders are processed within 2-5 business days (excluding weekends and holidays). After your order has been processed, the estimated delivery time is before 22 Apr, 2026, depending on customs. Please note that due to high demand, some items may experience longer shipping times, which will be communicated in your order confirmation email.

- Order Processing Time: Please allow 2-5 business days for us to process your order before it is shipped. Orders placed after 16:00 on Fridays, or during weekends and public holidays, will begin processing on the next business day. Processing times may be extended during peak seasons or sales events.

- Manufacturing Time: Some products need manufacturing time. The manufacturing process takes approximately 10-30 business days, depending on the complexity of the product and current demand. The expected timeframe will be communicated to you at order confirmation.

- Returns and Exchanges: We offer a 30-day return policy for most items. If you are not completely satisfied with your purchase, you may return it within 30 days of receipt for a refund or exchange. Items must be unused, in their original packaging, and accompanied by proof of purchase. Return shipping costs are the responsibility of the customer, unless the item was damaged or defective upon arrival.

1. What is the V100 32G GPU for Desktop Training?

The V100 32G is an NVIDIA Volta-architecture Tensor Core GPU optimized for deep learning, machine learning and high-performance computing. This product ships with 32 GB of high-bandwidth memory and 5,120 CUDA cores to accelerate training and inference workloads on desktop systems.

2. What are the key specifications?

Key specifications include 32 GB memory capacity (HBM2), 5,120 CUDA cores, a main clock frequency listed at 1370 MHz, Volta Tensor Cores for mixed-precision acceleration, and a one-year warranty (per the product listing).

3. Which precisions does the V100 support (FP32/FP16/FP64)?

The V100 supports multiple numeric precisions: FP32 (single-precision), FP16 (half-precision) accelerated by Tensor Cores for mixed-precision training, and FP64 (double-precision) for HPC workloads. Mixed-precision workflows typically deliver the largest training throughput improvements.
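As a rough illustration of why mixed-precision workflows usually keep an FP32 copy of the weights alongside the FP16 values, the sketch below emulates FP16 and FP32 rounding with Python's standard `struct` module. It does not run on the GPU; the weight and update values are hypothetical and chosen only to show the rounding effect.

```python
import struct


def round_to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def round_to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


weight = 1.0    # hypothetical model weight
update = 1e-4   # hypothetical small gradient step

fp16_result = round_to_fp16(weight + update)
fp32_result = round_to_fp32(weight + update)

# Near 1.0, FP16 values are spaced about 0.001 apart, so the small
# update is rounded away; FP32 has enough resolution to keep it.
print("FP16 keeps the update:", fp16_result != weight)
print("FP32 keeps the update:", fp32_result != weight)
```

In this example the FP16 result equals the original weight while the FP32 result does not, which is the usual motivation for accumulating weight updates in FP32 while running the heavy matrix math in FP16.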

4. Is this card compatible with popular deep learning frameworks?

Yes. The V100 is widely supported by major frameworks such as TensorFlow, PyTorch, MXNet and others via NVIDIA CUDA and cuDNN libraries. Use the appropriate CUDA toolkit and framework builds for optimal performance.

5. What software and drivers do I need to use the V100?

Install the appropriate NVIDIA display/compute driver for your OS and the CUDA toolkit version recommended by your framework. Also install cuDNN and any framework-specific GPU builds. Refer to NVIDIA release notes for exact driver/CUDA version compatibility.
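Once the driver is installed, a quick check like the following sketch can confirm that the card is visible and report the driver version. It assumes the `nvidia-smi` utility that ships with the NVIDIA driver is on the system path; the helper names `parse_gpu_line` and `query_gpus` are hypothetical, and the sample line in the usage note is illustrative rather than real output.

```python
import shutil
import subprocess

# Fields requested from nvidia-smi, in the order they appear in its CSV output.
QUERY_FIELDS = ["name", "driver_version", "memory.total"]


def parse_gpu_line(line: str) -> dict:
    """Turn one CSV line from nvidia-smi into a field -> value dictionary."""
    values = [value.strip() for value in line.split(",")]
    return dict(zip(QUERY_FIELDS, values))


def query_gpus() -> list:
    """Return one dictionary per detected GPU, or an empty list if none are found."""
    if shutil.which("nvidia-smi") is None:
        return []  # driver utilities not installed or not on PATH
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=" + ",".join(QUERY_FIELDS),
            "--format=csv,noheader",
        ],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    return [parse_gpu_line(line) for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    gpus = query_gpus()
    if not gpus:
        print("No NVIDIA GPU detected; check the driver installation.")
    for gpu in gpus:
        print(gpu)
```

For example, `parse_gpu_line("Tesla V100-PCIE-32GB, 000.00, 32768 MiB")` yields a dictionary with the card name, the (placeholder) driver version and the reported memory. Compare the reported driver version against the CUDA toolkit requirements in the NVIDIA release notes.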

6. What system requirements and slot does the card need?

The V100 typically requires a PCIe x16 slot on the motherboard and occupies a dual-slot, full-height bay in most desktop cases. Confirm the exact form factor with the seller, as there are different OEM variants.

7. What power supply do I need?

The V100 is a high-power accelerator and requires a robust system power supply and appropriate external PCIe power connectors. Exact power draw varies by variant; check the vendor's specification sheet for the card's TDP and required connectors and ensure your PSU has sufficient headroom.
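A simple headroom calculation can be sketched as follows. The wattages are assumptions for illustration only: 250 W is used as a placeholder for the card (confirm the actual TDP on the vendor's specification sheet), the CPU and rest-of-system figures are hypothetical, and the 30% margin is a common rule of thumb rather than a vendor requirement.

```python
import math


def recommended_psu_watts(component_watts: dict, headroom_pct: int = 30) -> int:
    """Sum the component power draws and add a percentage safety margin."""
    total = sum(component_watts.values())
    return math.ceil(total * (100 + headroom_pct) / 100)


# Hypothetical desktop build; replace each figure with your own component ratings.
build = {
    "gpu": 250,             # assumed card TDP, verify against the datasheet
    "cpu": 150,             # assumed CPU power draw
    "rest_of_system": 100,  # assumed drives, fans, motherboard, memory
}

print(recommended_psu_watts(build))  # 650
```

With these placeholder figures the components total 500 W, so a 30% margin suggests a power supply rated for at least 650 W.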

8. Does the V100 support NVLink for multi-GPU scaling?

NVLink support depends on the specific V100 variant. The SXM2 variant supports NVLink for high-bandwidth GPU-to-GPU communication, while some PCIe desktop variants do not. Confirm the exact model/variant if NVLink is required.

9. How does the 32 GB memory benefit training?

32 GB of HBM2 memory allows training larger models and larger batch sizes without resorting to model parallelism or frequent CPU-GPU transfers. This reduces data movement overhead, enables more efficient experimentation, and can shorten training time for many models.
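To get a rough feel for what fits in 32 GB, the sketch below estimates the memory taken by weights, gradients and optimizer state. It assumes a widely quoted rule of thumb of about 16 bytes per parameter for mixed-precision training with the Adam optimizer; it ignores activations, framework overhead and the memory reserved by the driver, so real limits are lower. The function names are hypothetical.

```python
GIB = 1024 ** 3  # bytes in one gibibyte


def training_state_gib(num_params: int, bytes_per_param: int = 16) -> float:
    """Estimate memory for weights, gradients and optimizer state, in GiB."""
    return num_params * bytes_per_param / GIB


def fits_on_card(num_params: int, card_gib: int = 32, bytes_per_param: int = 16) -> bool:
    """Check whether the estimated training state fits in the card's memory."""
    return training_state_gib(num_params, bytes_per_param) <= card_gib


# Hypothetical model sizes, in number of parameters.
for num_params in (1_000_000_000, 2_500_000_000):
    estimate = training_state_gib(num_params)
    print(f"{num_params:,} parameters: ~{estimate:.1f} GiB, fits: {fits_on_card(num_params)}")
```

Under these assumptions a 1-billion-parameter model needs roughly 14.9 GiB for its training state and fits on the card, while a 2.5-billion-parameter model needs about 37.3 GiB and does not.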

10. What level of performance improvement can I expect over CPUs?

Performance gains vary by workload and precision, but Volta Tensor Cores and many-core CUDA execution can provide orders-of-magnitude speedups for matrix-heavy deep learning tasks compared with CPU-only execution. Real-world speedups depend on model, batch size, and software optimization.

11. Is ECC memory supported?

Volta-based datacenter-class cards, including V100 variants, generally support ECC for increased reliability on memory operations. Verify the specific product listing or vendor datasheet to confirm ECC support for the particular unit you purchase.

12. What operating systems are supported?

The V100 is supported under major operating systems commonly used for training: Linux (typical datacenter/desktop distributions) and Windows. Linux distributions are most commonly used in production and research environments; ensure driver compatibility for your OS version.

13. What warranty and support are provided?

The product description lists a one-year warranty. For extended warranty, enterprise support, or RMA details, check with the seller or NVIDIA-authorized reseller from whom you purchase the card.

Latest Order Arrivals

Discover our latest orders

12 Heads Embroidery Machine

Dewatering Pump Machine

Order Collection

Portable Water Drilling Rig

Order Successfully Collected

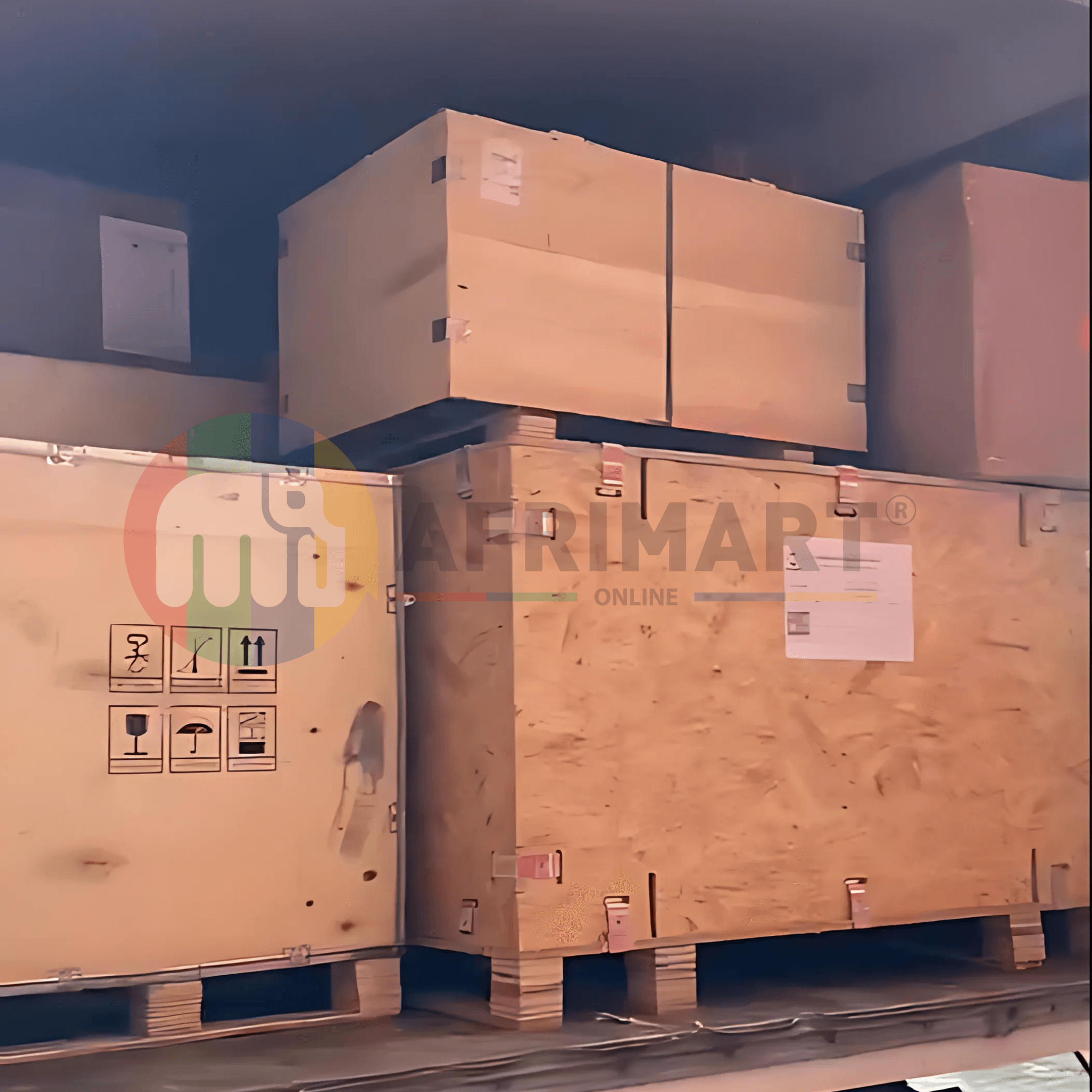

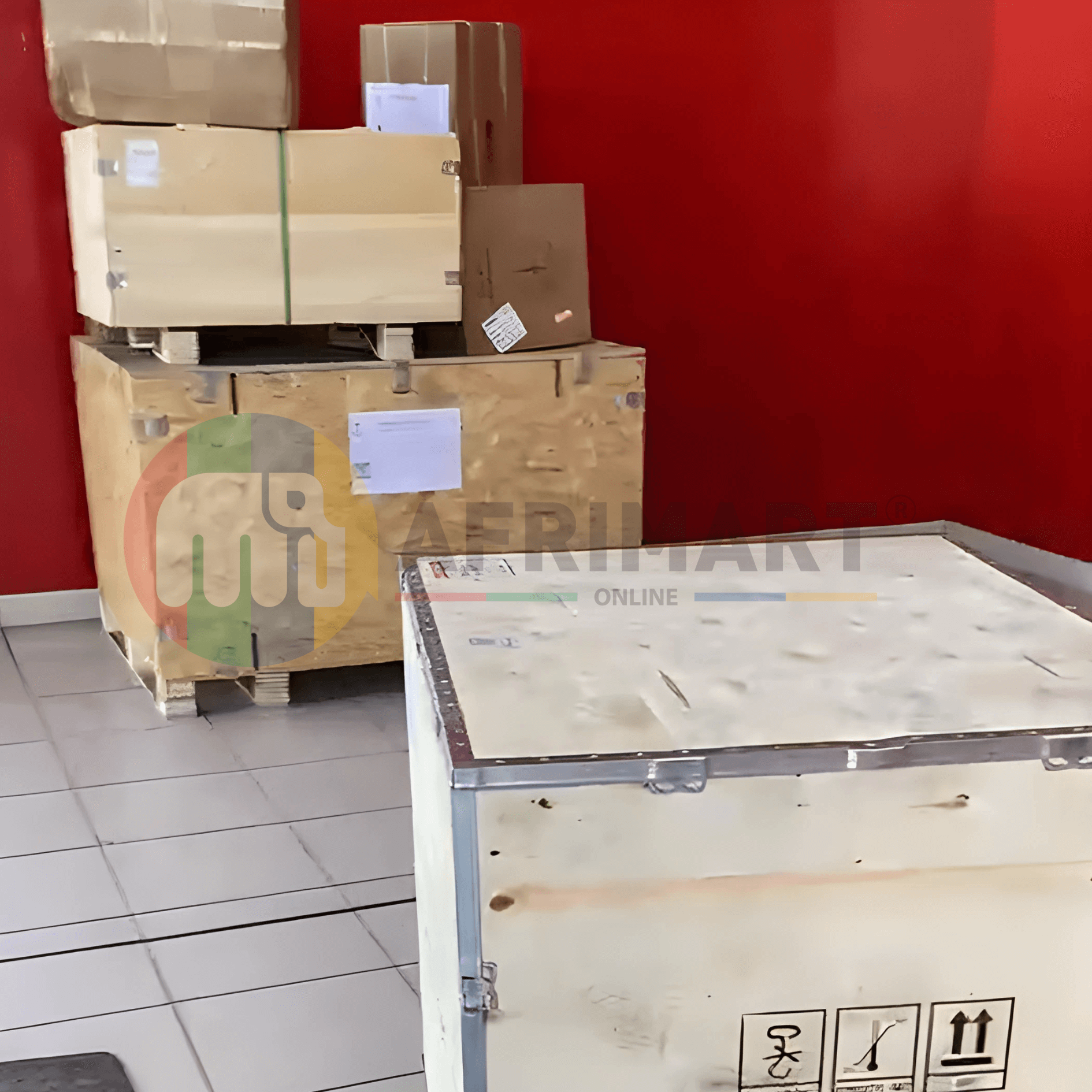

Batch of Orders

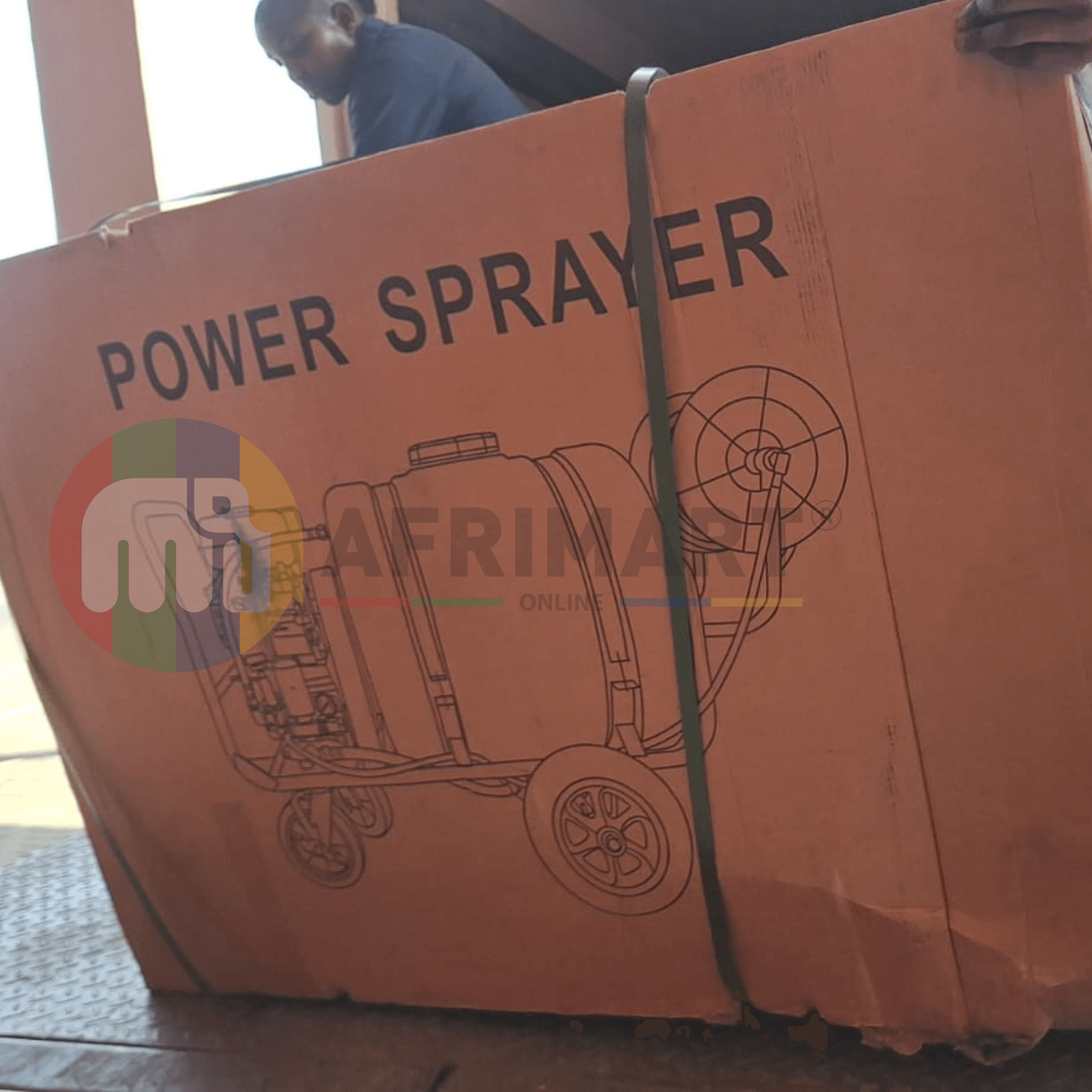

Agriculture Processing Machines

Meat Grinder Machine

Water Pump Equipment

Packaging Machine and accessories

Fabrics Manufacturing Equipment

Mining Equipment

Food Processing Machine

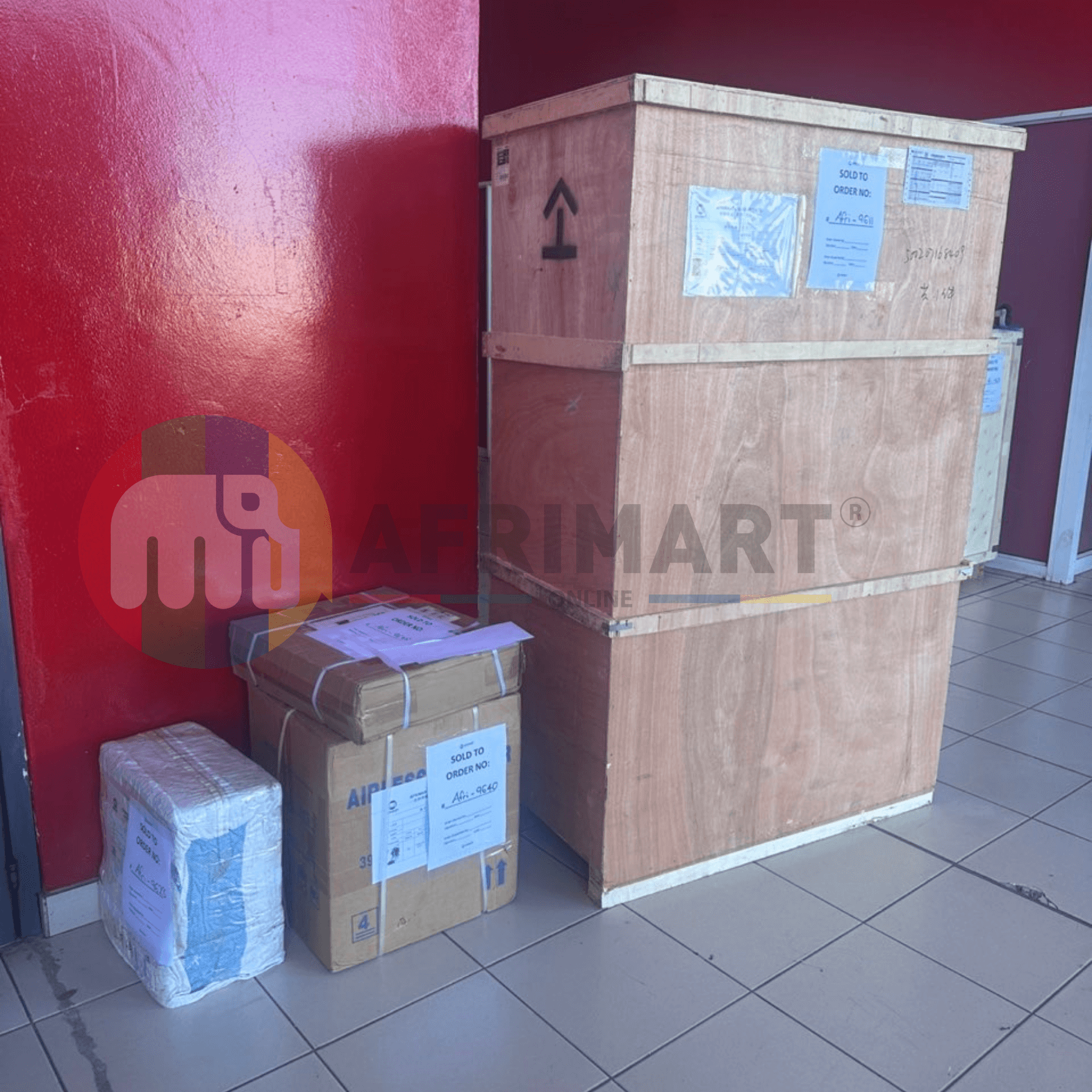

Latest Orders Labelled

wheel alignment machines

new arrivals

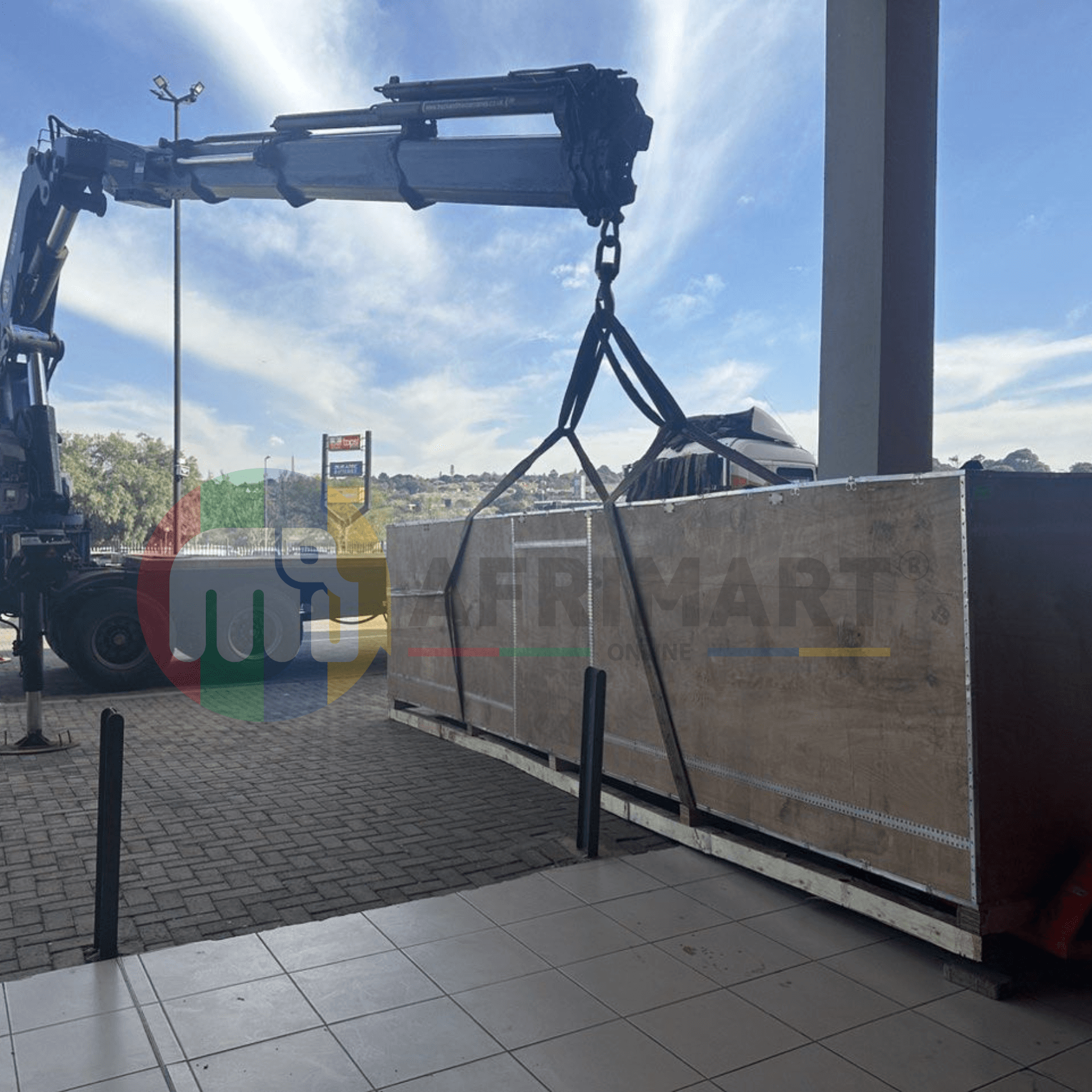

Pre Orders Offloading

Latest Arrivals

26 January 2026

Toilet paper making machine

Toilet paper Rewinding Machine

latest arrivals

offloading

order success

order collection

order offloading