B21, China Town Mall, Midrand

NVIDIA Turing Design T4 16GB GPU for AI Graphics

- Section: Computers

- Category: Graphic Cards

- SKU: 1600511087890

- Shipping Timeframes: All orders are processed within 2-5 business days (excluding weekends and holidays). After your order has been processed, the estimated delivery date is before 22 Apr, 2026, depending on customs. Please note that due to high demand, some items may take longer to ship; any delay will be communicated in the order confirmation email.

- Order Processing Time: Please allow 2-5 business days for us to process your order before it is shipped. Orders placed after 16:00 on Fridays, or during weekends and public holidays, will begin processing on the next business day. Processing times may be extended during peak seasons or sales events.

- Manufacturing Time: Some products need to be manufactured after ordering. Manufacturing takes approximately 10-30 business days, depending on the product, its complexity, and current demand. The expected timeframe will be communicated to you during order confirmation.

- Returns and Exchanges: We offer a 30-day return policy for most items. If you are not completely satisfied with your purchase, you may return it within 30 days of receipt for a refund or exchange. Items must be unused, in their original packaging, and accompanied by proof of purchase. Return shipping costs are the responsibility of the customer, unless the item was damaged or defective upon arrival.

1. What GPU architecture does the NVIDIA Turing Design T4 16GB use?

The card is based on NVIDIA's Turing architecture.

2. What are the main compute specifications (CUDA cores, Tensor cores, TFLOPS/TOPS)?

It has 2,560 NVIDIA CUDA cores and 320 Turing Tensor Cores. Performance is listed as 8.1 TFLOPS (single-precision), 65 TFLOPS (mixed-precision FP16/FP32), 130 TOPS (INT8) and 260 TOPS (INT4).
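As a rough consistency check, the listed peak figures can be reproduced from the core counts. The sketch below assumes a 1.59 GHz boost clock and the per-clock rates NVIDIA describes for Turing (one fused multiply-add per CUDA core, 64 FP16 fused multiply-adds per Tensor Core, doubling for INT8 and again for INT4). These inputs are assumptions, not part of this listing, and sustained real-world throughput is lower than these theoretical peaks.

```python
# Sketch: reconstruct the T4's listed peak throughput from its core counts.
# ASSUMPTIONS (not from this listing): 1.59 GHz boost clock, 2 FLOPs per CUDA
# core per clock (one FMA), and 64 FP16 FMAs per Turing Tensor Core per clock,
# with INT8 doubling the FP16 rate and INT4 doubling it again.

BOOST_CLOCK_HZ = 1.59e9  # assumed boost clock
CUDA_CORES = 2560        # from the listing
TENSOR_CORES = 320       # from the listing


def peak_fp32_tflops(cores: int, clock_hz: float) -> float:
    """Peak single-precision TFLOPS: one FMA (2 FLOPs) per core per clock."""
    return cores * 2 * clock_hz / 1e12


def peak_tensor_rates(tensor_cores: int, clock_hz: float) -> dict:
    """Peak Tensor Core rates: FP16 mixed-precision TFLOPS, INT8 and INT4 TOPS."""
    fp16 = tensor_cores * 64 * 2 * clock_hz / 1e12  # 64 FMAs = 128 ops per clock
    return {"fp16_mixed": fp16, "int8": fp16 * 2, "int4": fp16 * 4}


if __name__ == "__main__":
    print(f"fp32: {peak_fp32_tflops(CUDA_CORES, BOOST_CLOCK_HZ):.1f}")
    for name, value in peak_tensor_rates(TENSOR_CORES, BOOST_CLOCK_HZ).items():
        print(f"{name}: {value:.1f}")
```

Under these assumptions the results land at roughly 8.1, 65, 130 and 260, in line with the listed specification.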

3. How much memory and memory bandwidth does this GPU have?

The GPU includes 16 GB of GDDR6 memory with 300 GB/s memory bandwidth and supports ECC.
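To put the 16 GB and 300 GB/s figures in practical terms, the sketch below estimates whether a model's weights fit in GPU memory and how long a single full read of those weights takes at the listed bandwidth. The helper names, the 80% headroom margin and the 7-billion-parameter example are hypothetical. Activations, workspace buffers and framework overhead are ignored, so real memory needs are higher.

```python
# Hypothetical helpers: rough memory and bandwidth estimates for a 16 GB card.
# Only model weights are counted; activations, workspace buffers and
# framework overhead are ignored, so these results are optimistic.

GPU_MEMORY_BYTES = 16e9   # 16 GB from the listing, taken as 16e9 bytes
MEMORY_BANDWIDTH = 300e9  # 300 GB/s from the listing

BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_bytes(num_params: int, precision: str) -> float:
    """Size of the weights alone at the given numeric precision."""
    return num_params * BYTES_PER_PARAM[precision]


def fits(num_params: int, precision: str, headroom: float = 0.8) -> bool:
    """True if the weights use at most `headroom` of GPU memory (0.8 is an arbitrary margin)."""
    return weight_bytes(num_params, precision) <= headroom * GPU_MEMORY_BYTES


def min_read_seconds(num_params: int, precision: str) -> float:
    """Lower bound on the time to read every weight once at peak bandwidth."""
    return weight_bytes(num_params, precision) / MEMORY_BANDWIDTH


if __name__ == "__main__":
    params = 7_000_000_000  # hypothetical 7B-parameter model
    for precision in BYTES_PER_PARAM:
        print(precision, fits(params, precision),
              f"{min_read_seconds(params, precision) * 1000:.1f} ms")
```

The read-time bound matters for memory-bound inference: each generated output that touches every weight cannot complete faster than this.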

4. Does this GPU support ECC memory?

Yes. The product specification indicates ECC (error-correcting code) is supported for GPU memory.

5. What is the form factor and interface for this GPU?

It is a low-profile PCIe card using an x16 PCIe Gen3 interface.

6. What kind of thermal solution does the card use?

The card uses a passive thermal solution, which requires adequate chassis or rack airflow provided by the host system.

7. Which compute APIs and frameworks are supported?

The GPU supports CUDA and is compatible with NVIDIA TensorRT and ONNX-based workflows; it can be used with frameworks that target those runtimes.
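For ONNX models, ONNX Runtime accepts a list of execution providers in order of preference. The sketch below prefers TensorRT, then CUDA, then CPU, keeping only the providers the installed onnxruntime build actually reports. The provider identifiers, `onnxruntime.get_available_providers()` and `onnxruntime.InferenceSession(..., providers=...)` belong to ONNX Runtime's Python API; the `pick_providers` helper and the `model.onnx` path are hypothetical.

```python
# Sketch: choose ONNX Runtime execution providers in order of preference.
# Which providers exist depends on the installed package (e.g. onnxruntime-gpu)
# and on the NVIDIA libraries present on the system.

PREFERRED = [
    "TensorrtExecutionProvider",  # runs supported subgraphs through TensorRT
    "CUDAExecutionProvider",      # runs operators with CUDA kernels
    "CPUExecutionProvider",       # fallback that is always present
]


def pick_providers(available: list[str]) -> list[str]:
    """Return the preferred providers that are actually available, best first."""
    chosen = [p for p in PREFERRED if p in available]
    return chosen or ["CPUExecutionProvider"]


if __name__ == "__main__":
    try:
        import onnxruntime as ort
    except ImportError:
        print("onnxruntime is not installed")
    else:
        providers = pick_providers(ort.get_available_providers())
        print("Using:", providers)
        # "model.onnx" is a placeholder path for your own exported model:
        # session = ort.InferenceSession("model.onnx", providers=providers)
```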

8. Is this GPU better for AI training or AI inference?

This Turing-based T4 is optimized for AI inference and accelerated graphics/edge AI workloads thanks to its Tensor Cores and INT8/INT4 performance. It can be used for some training tasks, but higher-end GPUs are typically preferred for large-scale model training.
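INT8 inference stores values as 8-bit integers plus a scale factor, which is what lets the Tensor Cores run at their higher INT8 rates. The sketch below shows generic symmetric per-tensor quantization in plain Python to illustrate the idea; it is not TensorRT's calibration procedure, and the function names are hypothetical.

```python
# Minimal sketch of symmetric per-tensor INT8 quantization.
# Generic illustration only; production toolchains such as TensorRT use
# their own calibration methods to choose scales.


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Map floats to int8 codes in [-127, 127] using one shared scale."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes: list[int], scale: float) -> list[float]:
    """Recover approximate float values from int8 codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.0]  # hypothetical example weights
    codes, scale = quantize_int8(weights)
    print("codes:", codes, "scale:", scale)
    print("restored:", dequantize_int8(codes, scale))
```

Each restored value differs from the original by at most half the scale, which is the precision traded for the higher INT8 throughput.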

9. Can I install this card in a standard workstation or server?

Yes, the low-profile PCIe form factor makes it suitable for many workstations and servers, but because it is passively cooled you must ensure the host provides sufficient airflow and that the motherboard has an available PCIe Gen3 x16 slot.

10. Does the card support NVLink or other high-speed GPU-to-GPU interconnects?

The specification lists 32 GB/s of interconnect bandwidth and a PCIe Gen3 x16 interface. NVLink is not listed in the specification, so check the vendor or NVIDIA documentation if NVLink or another multi-GPU interconnect is required.

11. What are the system requirements for power and cooling?

This listing does not state a TDP or power draw. Because the card is passively cooled, make sure your system or server chassis supplies adequate directed airflow across the card and that your power supply meets the overall system power requirements. Refer to the vendor datasheet for exact power specifications.

12. Can this GPU be used in virtualized or containerized environments?

Yes. It can be used in containerized workflows through the NVIDIA Container Toolkit, and it can be assigned to a virtual machine using PCIe GPU passthrough. For vGPU or full virtualization support, verify compatibility with NVIDIA's virtualization solutions and your platform vendor's documentation.

13. What does the listed 32 GB/sec interconnect bandwidth refer to?

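The specification lists 32 GB/s of interconnect bandwidth for the link between the GPU and the host system. This corresponds to the theoretical bandwidth of a PCIe Gen3 x16 link counted in both directions combined (roughly 16 GB/s each way); actual transfer rates are lower because of protocol overhead. Consult the detailed datasheet for how this metric is measured.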
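The 32 GB/s figure can be reproduced from PCIe 3.0 signalling parameters. The sketch below uses the PCIe 3.0 rate of 8 GT/s per lane and 128b/130b line encoding (values from the PCIe 3.0 specification, not from this listing) and ignores packet and flow-control overhead, so it gives an upper bound rather than a measured rate.

```python
# Worked arithmetic: theoretical PCIe Gen3 bandwidth after line encoding.
# Inputs are PCIe 3.0 specification values; packet headers and flow control
# are ignored, so real transfers between host and GPU are slower.

GT_PER_S = 8e9        # PCIe 3.0 transfer rate per lane (transfers per second)
ENCODING = 128 / 130  # 128b/130b line-code efficiency
LANES = 16            # x16 slot, as listed


def pcie_gen3_bytes_per_s(lanes: int) -> float:
    """Theoretical one-direction bandwidth in bytes per second."""
    return GT_PER_S * ENCODING * lanes / 8  # 8 bits per byte


if __name__ == "__main__":
    one_way = pcie_gen3_bytes_per_s(LANES)
    print(f"per direction:   {one_way / 1e9:.2f} GB/s")
    print(f"both directions: {2 * one_way / 1e9:.2f} GB/s")
```

For an x16 link this gives about 15.75 GB/s per direction and about 31.5 GB/s combined, which rounds to the listed 32 GB/s.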

14. Which drivers and software should I install to use this GPU?

Install NVIDIA's appropriate GPU drivers and the CUDA Toolkit for compute workloads. For inference and model deployment, install TensorRT and an ONNX runtime as needed. Always match driver and toolkit versions to your OS and framework requirements.
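Matching driver and toolkit versions usually means checking that the installed driver meets the minimum a CUDA release requires. The sketch below compares dotted driver versions. The minimums in the table are Linux x86_64 values as listed in NVIDIA's CUDA compatibility documentation at the time of writing; treat them as assumptions and confirm against the current release notes. The helper names and the example driver version are hypothetical.

```python
# Sketch: compare an installed NVIDIA driver version against a CUDA minimum.
# ASSUMPTION: minimums below are Linux x86_64 values from NVIDIA's CUDA
# compatibility documentation at the time of writing; verify before relying on them.

MIN_LINUX_DRIVER = {
    "11.x": "450.80.02",
    "12.x": "525.60.13",
}


def parse_version(text: str) -> tuple[int, ...]:
    """Turn '535.104.05' into (535, 104, 5) so versions compare numerically."""
    return tuple(int(part) for part in text.strip().split("."))


def driver_supports(driver: str, cuda_family: str) -> bool:
    """True if the driver meets the assumed minimum for the CUDA family."""
    return parse_version(driver) >= parse_version(MIN_LINUX_DRIVER[cuda_family])


if __name__ == "__main__":
    # On a live system the driver version can be read with:
    #   nvidia-smi --query-gpu=driver_version --format=csv,noheader
    installed = "470.82.01"  # hypothetical example value
    for family in MIN_LINUX_DRIVER:
        print(family, driver_supports(installed, family))
```

Comparing integer tuples rather than raw strings avoids mistakes such as treating "99.0" as newer than "450.80.02".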

15. Where can I find warranty, availability, and purchasing details?

Warranty, availability, and purchase terms vary by reseller and region. Check the seller or manufacturer's product page and datasheet for warranty information, supported SKUs, and authorized distributors.

Latest Order Arrivals

Discover our latest orders

12 Heads Embroidery Machine

Dewatering Pump Machine

Order Collection

Portable Water Drilling Rig

Order Usefully Collected

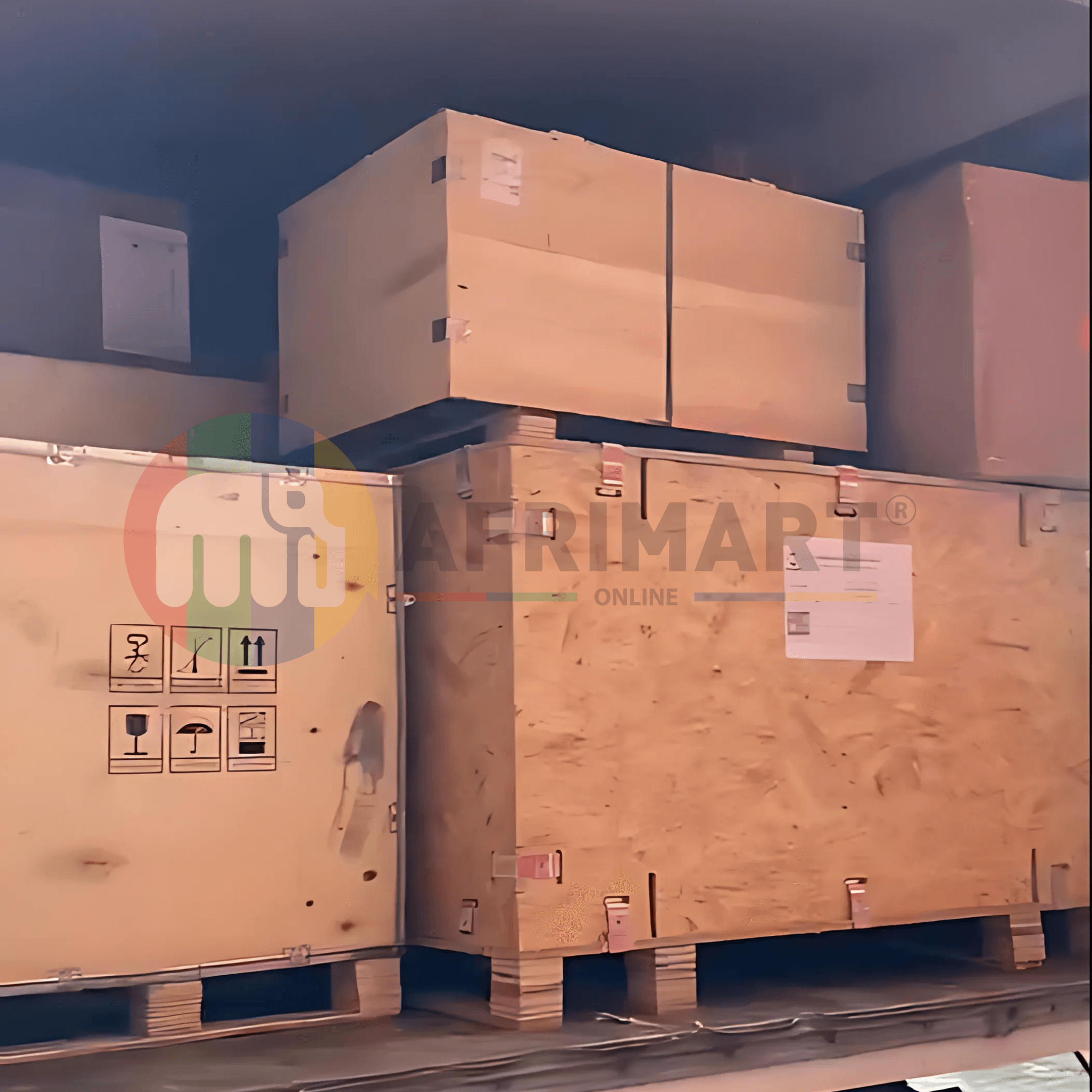

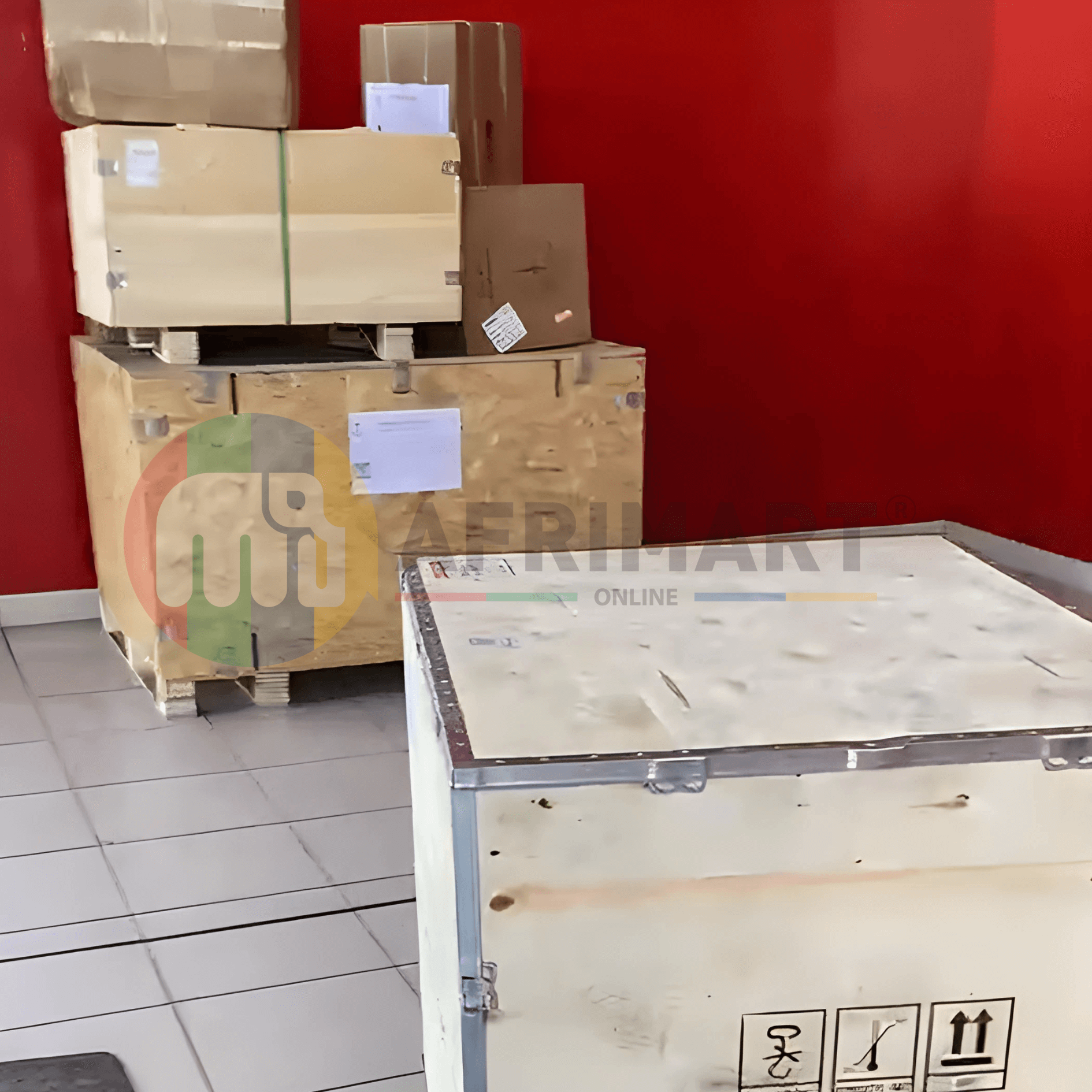

Batch of Orders

Agriculture Processing Machines

Meat Grinder Machine

Water Pump Equipment

Packaging Machine and accessories

Fabrics Manufacturing Equipment

Mining Equipments

Food Processing Machine

Batch of Orders

Batch of Orders

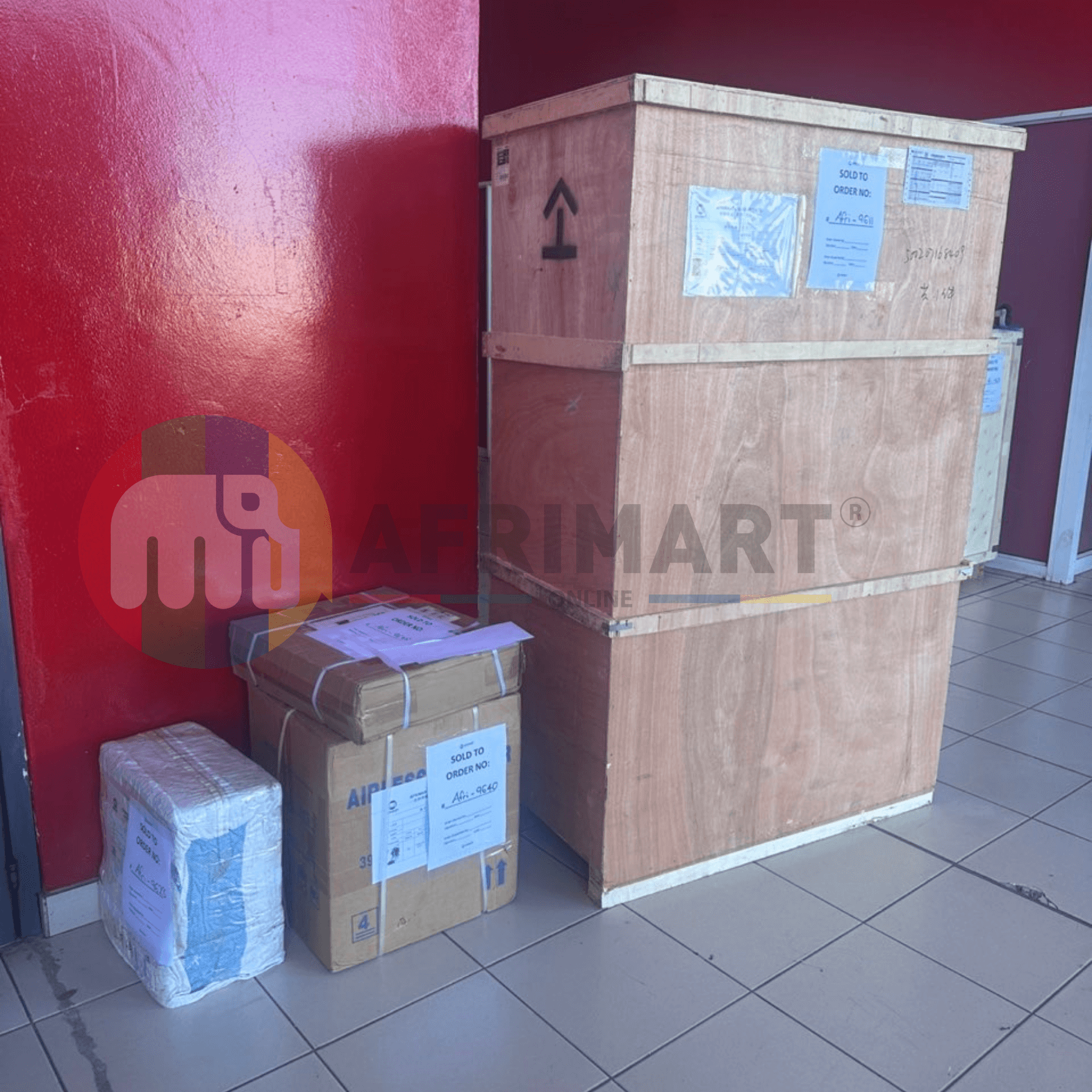

Latest Orders Labelled

wheel alignment machines

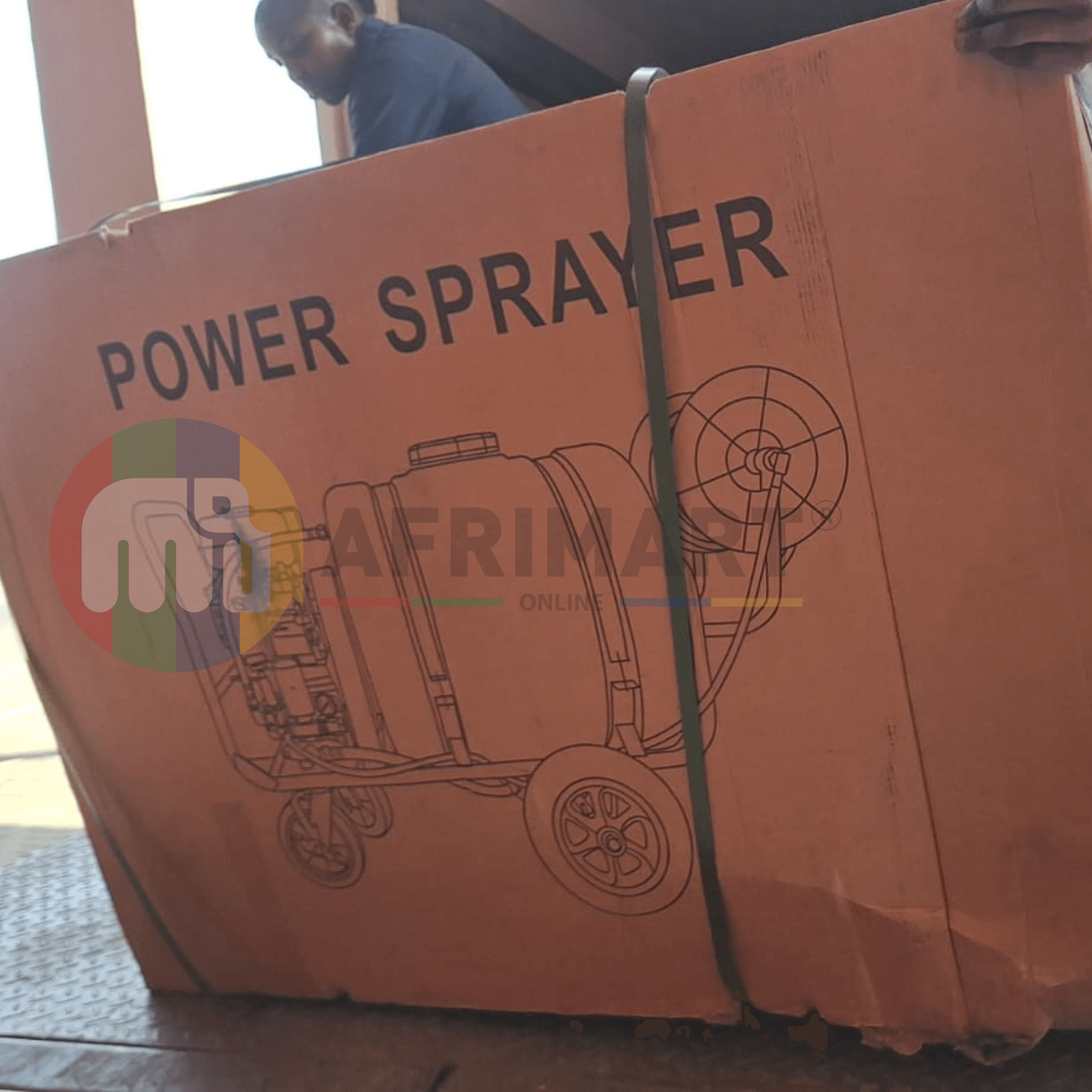

new arrivals

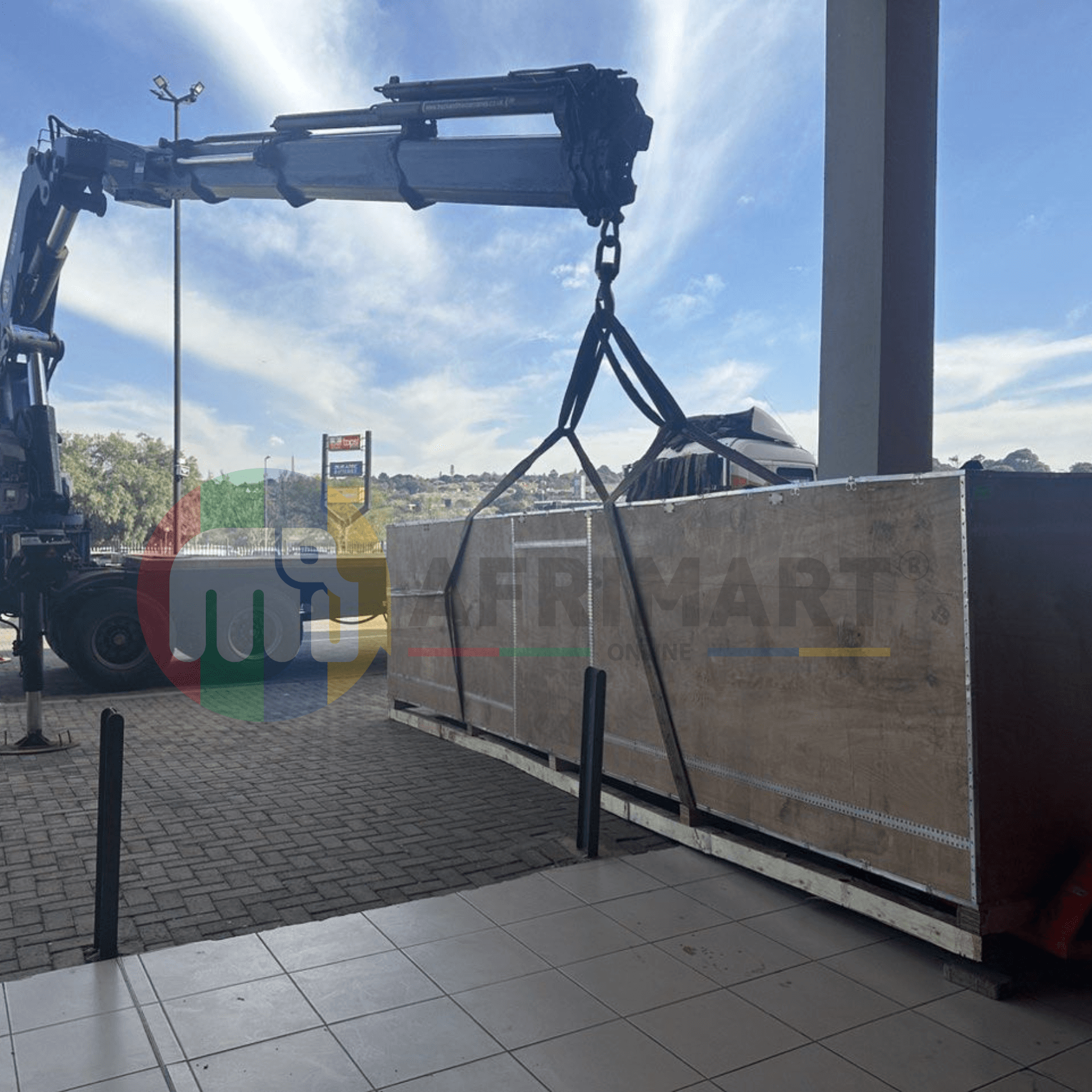

Pre Orders Offloading

Latest Arrivals

Latest Arrivals

Latest Arrivals

26 January 2026

Toilet paper making machine

Toilet paper making machine

Toilet paper Rewinding Machine

latest arrivals

offloading

order success

order collection

order offloading